Introduction

Music has been an integral part of human civilization and a profound expression of creativity, emotion, and culture. From the rhythmic beats of ancient rituals to the complex harmonies of modern performances, music has evolved alongside humanity, reflecting artistic trends as well as social and technological change.

At the heart of both music and technology lies the concept of synchronization: the coordination of simultaneous processes or events so that they operate in unison. This concept is not limited to music; it is a fundamental principle observed in many aspects of life:

- Walking Together: People unconsciously adjust their pace to match each other's steps

- Conversation: Speakers synchronize their speech patterns and rhythms for fluid communication

- Computer Networks: Multiple machines coordinate to work together efficiently

- Traffic Systems: Traffic lights are synchronized to optimize flow and safety

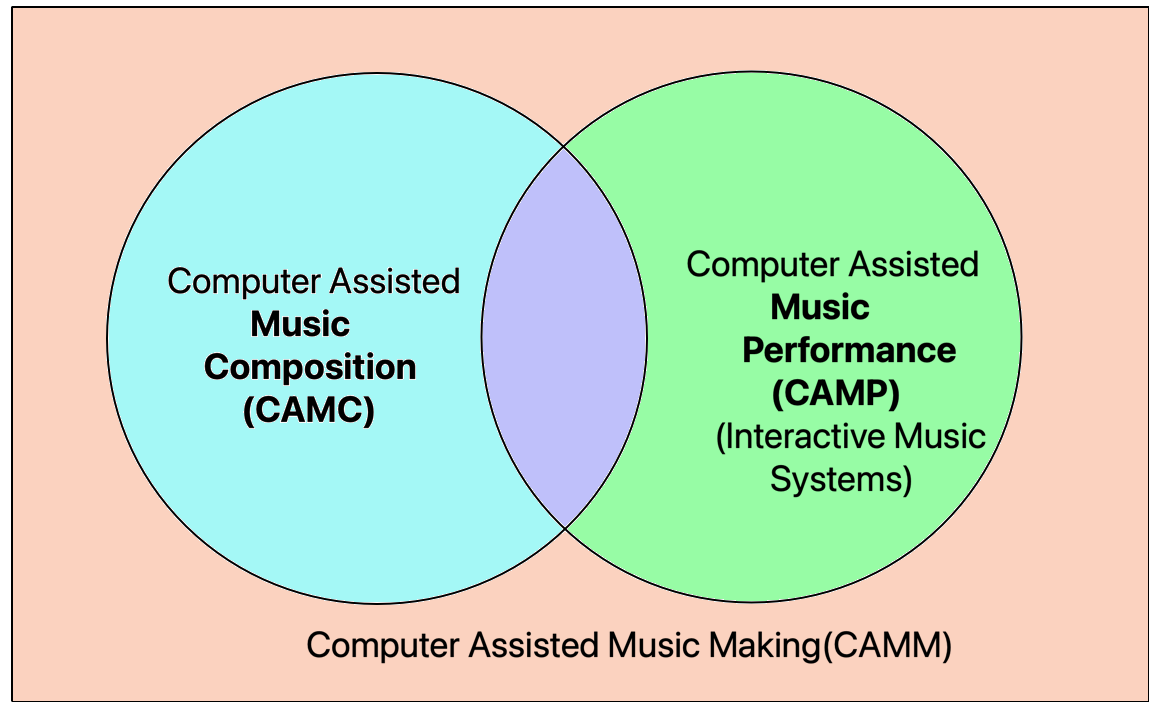

Computer Assisted Music Making (CAMM)

Computer Assisted Music Making (CAMM) represents the integration of technology in music creation, helping musicians in both composition and performance. CAMM can be divided into two overlapping categories:

🎼 Computer Assisted Music Composition (CAMC)

Tools and systems designed to support the composition process, using algorithms and artificial intelligence to suggest or refine compositions.

🎪 Computer Assisted Music Performance (CAMP)

Focuses on real-time enhancements to live or recorded performance, including interactive systems that respond to a performer's movements.

Key Musical Terms

To fully understand the importance of synchronization in music, several key musical terms are essential:

Beat

The basic unit of time in music, providing the pulse that listeners often clap or tap along to.

Tempo

The speed at which music is played, usually measured in beats per minute (BPM).
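Because tempo is given in beats per minute, the duration of one beat follows directly as 60 / BPM seconds. The minimal Python sketch below converts a tempo into a beat period and a metronomic grid of onset times, which serves as a simple reference for later synchronization. The function names are illustrative and do not come from any library.

```python
def beat_period_seconds(bpm: float) -> float:
    """Duration of one beat in seconds at a tempo given in beats per minute."""
    if bpm <= 0:
        raise ValueError("tempo must be positive")
    return 60.0 / bpm


def beat_onsets(bpm: float, n_beats: int, start: float = 0.0) -> list[float]:
    """Onset times (seconds) of n_beats evenly spaced beats at a constant tempo."""
    period = beat_period_seconds(bpm)
    return [start + i * period for i in range(n_beats)]


if __name__ == "__main__":
    print(beat_period_seconds(120))  # 0.5
    print(beat_onsets(90, 4))        # four beats, about 0.667 s apart
```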

Rubato

A technique where performers subtly vary tempo for expressive purposes.

Rhythm

The pattern of sounds and silences in music, providing the framework for movement and timing.

Dynamics

Volume levels in music, ranging from soft (piano) to loud (forte), adding emotional depth.

Polyrhythm

The simultaneous use of two or more contrasting rhythms, such as three beats against two, which requires performers to synchronize complex, interlocking structures.
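The three-against-two case becomes concrete when both voices are placed on the smallest common grid. That grid has as many slots as the least common multiple of the two beat counts. The short sketch below assumes that both voices share the same cycle length. The function names are hypothetical.

```python
from math import lcm


def polyrhythm_grid(a: int, b: int) -> str:
    """Render an a-against-b polyrhythm on a grid of lcm(a, b) equal slots.

    'X' = both voices strike, 'A' = only the a-voice, 'B' = only the b-voice, '.' = rest.
    """
    n = lcm(a, b)
    cells = []
    for k in range(n):
        in_a = k % (n // a) == 0  # the a-voice strikes every n // a slots
        in_b = k % (n // b) == 0  # the b-voice strikes every n // b slots
        if in_a and in_b:
            cells.append("X")
        elif in_a:
            cells.append("A")
        elif in_b:
            cells.append("B")
        else:
            cells.append(".")
    return "".join(cells)


def polyrhythm_times(a: int, b: int, cycle_seconds: float) -> tuple[list[float], list[float]]:
    """Onset times (seconds) of both voices within one cycle of the given length."""
    return ([cycle_seconds * i / a for i in range(a)],
            [cycle_seconds * j / b for j in range(b)])


if __name__ == "__main__":
    print(polyrhythm_grid(3, 2))  # X.ABA.
    print(polyrhythm_times(3, 2, 1.2))
```

Only the first slot is shared. In between, the two voices alternate at uneven spacings, and this is what makes the pattern demanding to synchronize.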

The Evolution of Automated Musical Systems

Early Mechanization and the Birth of Automated Music

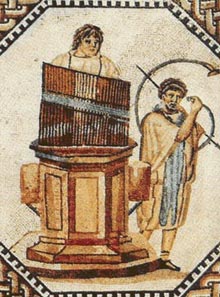

The concept of automated music dates back to ancient times. One of the earliest examples is the water organ (hydraulis) from ancient Greece, invented in the 3rd century BCE.

The Rise of Mechanical Instruments: 17th to 19th Centuries

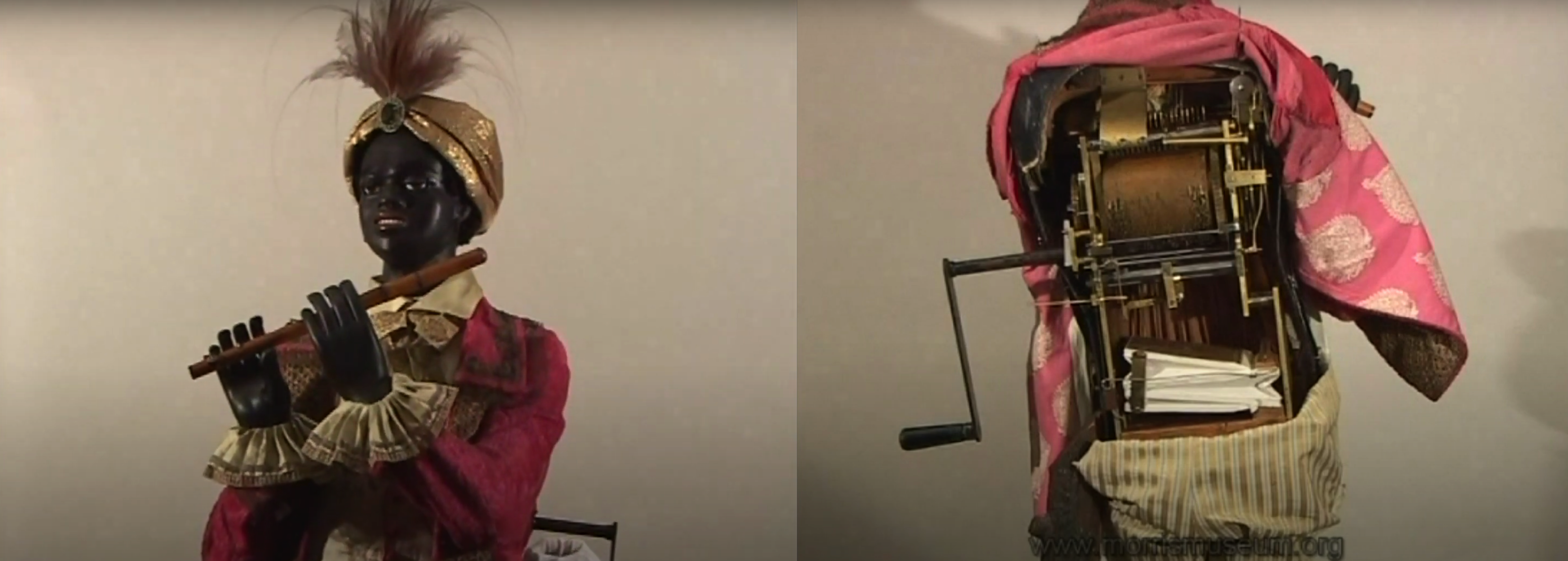

The 17th to 19th centuries saw significant advances in mechanized music, including:

- Musical clocks (carillon clocks) - Played pre-programmed music on bells and chimes

- Mechanical flute player by Jacques de Vaucanson - Mimicked human playing techniques

- Player piano (pianola) - Used perforated paper rolls to encode music

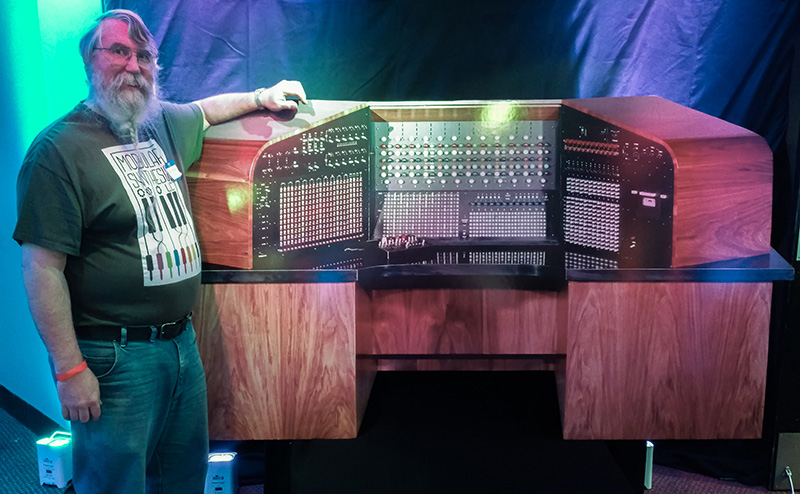

Electromechanical and Early Electronic Systems: 20th Century

The 20th century brought revolutionary changes with the introduction of electromechanical and electronic instruments such as the Telharmonium, the Hammond organ, and the Theremin.

The Digital Revolution: Late 20th Century

The late 20th century brought the digital revolution, transforming music automation through:

- MIDI (Musical Instrument Digital Interface) protocol (1983) - Enabled communication between electronic instruments and computers

- Sequencer Software - Programs like Cubase revolutionized home and professional studios

- Algorithmic Composition - Composers like Iannis Xenakis experimented with computer-generated music

- Early Robot Musicians - WABOT-2 could read musical scores and play a keyboard instrument
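MIDI also carries timing information. Its System Real-Time Timing Clock message (status byte 0xF8) is sent 24 times per quarter note. A device following a MIDI clock can therefore derive the tempo from the spacing of these pulses. The sketch below computes that spacing and builds a raw Note On message. It assumes the beat is a quarter note and only constructs bytes. Actual transport is left to whatever MIDI library or interface is in use. The function names are illustrative.

```python
TIMING_CLOCK = 0xF8       # MIDI System Real-Time "Timing Clock" status byte
PULSES_PER_QUARTER = 24   # MIDI clock resolution: 24 pulses per quarter note
NOTE_ON = 0x90            # Note On status nibble; the low nibble carries the channel (0-15)


def clock_interval_seconds(bpm: float) -> float:
    """Spacing between Timing Clock pulses at a tempo given in quarter notes per minute."""
    if bpm <= 0:
        raise ValueError("tempo must be positive")
    return 60.0 / (bpm * PULSES_PER_QUARTER)


def tempo_from_clock_interval(interval_seconds: float) -> float:
    """Inverse: recover the tempo (BPM) from the measured spacing of clock pulses."""
    return 60.0 / (interval_seconds * PULSES_PER_QUARTER)


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a raw 3-byte Note On message."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    return bytes([NOTE_ON | channel, note, velocity])


if __name__ == "__main__":
    print(clock_interval_seconds(120))   # about 0.0208 s between pulses
    print(note_on(0, 60, 100).hex())     # 903c64 (middle C, velocity 100)
```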

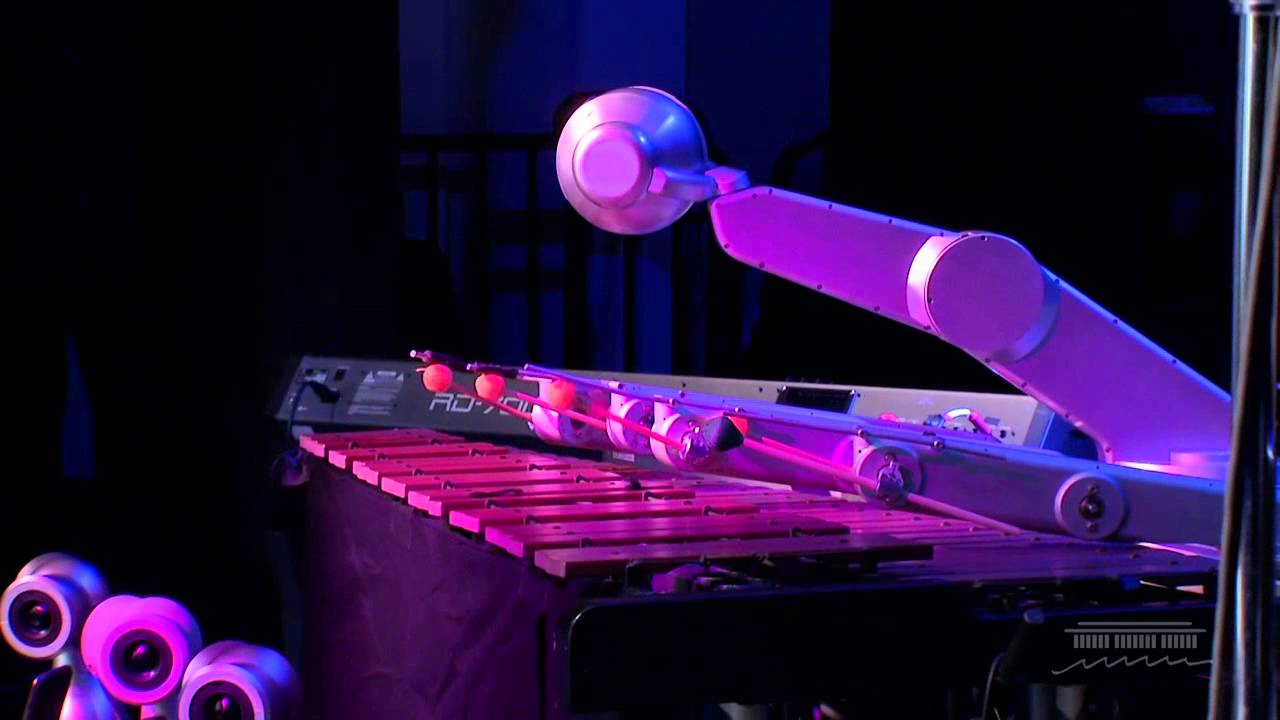

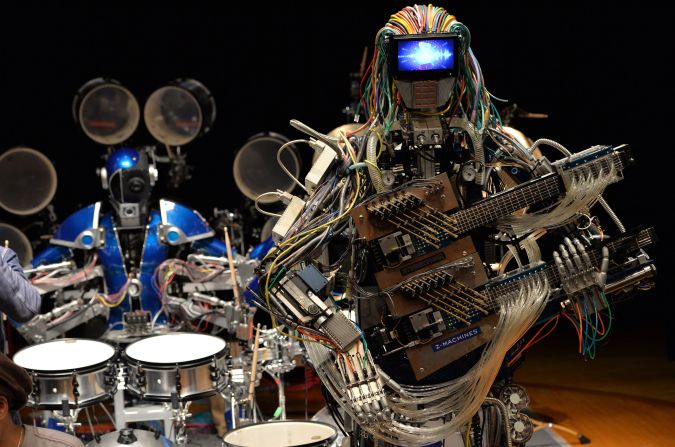

Modern Robotics and AI in Music: 21st Century

The 21st century has witnessed the emergence of sophisticated robotic musicians and the integration of artificial intelligence into music making.

Machine Learning Techniques for Synchronization

Modern synchronization systems leverage advanced machine learning techniques:

CNNs (Convolutional Neural Networks)

Used for gesture recognition and facial expression analysis in visual synchronization.

LSTMs (Long Short-Term Memory networks)

Handle sequential data and predict timing changes in musical performances.

Deep Learning

Enables the integration of multimodal data sources, such as audio, visual, and gestural data, for comprehensive synchronization.

| Era | Key Developments | Examples |
|---|---|---|
| Early Mechanization | Rudimentary machines producing autonomous sound | Water organ (hydraulis) |
| 17th-19th Centuries | More sophisticated mechanical instruments | Musical clocks, barrel organs, player pianos |
| Early 20th Century | Introduction of electromechanical and electronic instruments | Telharmonium, Hammond organ, Theremin |
| Late 20th Century | Digital revolution, MIDI, algorithmic composition | Digital synthesizers, computer-generated music |
| 21st Century | AI and robotics in music | Robotic musicians, AI composition systems |

Synchronization in Human-Robot Musical Interaction

The Central Challenge

As automated musical systems evolve from simple mechanization to sophisticated robotic performers, synchronization emerges as the central challenge. Unlike systems that merely play back pre-recorded music, human-robot musical interaction demands synchronization that combines technical precision with musical expression.

Defining Synchronization in Human-Robot Musical Ensembles

Synchronization in musical performance is the process of aligning timing and rhythmic elements among multiple performers to produce a cohesive outcome. In human-robot ensembles, this extends beyond mechanical timekeeping.

The Dual Nature of Synchronization

⚙️ Technical Precision

The robot's capacity to execute musical events with accurate timing, pitch, and dynamics. Many robotic musicians excel at maintaining consistent tempo.

🎨 Musical Expression

Emerges from nuanced variations in timing, dynamics, and articulation. Humans naturally introduce micro-timing shifts and adjust their playing in response to one another.
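One way to quantify the tension between precision and expression is to measure signed asynchrony. Signed asynchrony is the difference between the times at which the robot and the human play each corresponding note. The following sketch assumes that onsets have already been detected and paired one-to-one. The function name and sample values are illustrative.

```python
from statistics import mean, pstdev


def asynchrony_stats(human_onsets: list[float], robot_onsets: list[float]) -> tuple[float, float]:
    """Mean and population standard deviation of signed asynchrony (robot minus human), in seconds.

    Negative mean: the robot tends to play early; positive: it tends to lag.
    Assumes the two onset lists are already paired note-for-note.
    """
    if not human_onsets or len(human_onsets) != len(robot_onsets):
        raise ValueError("need two non-empty onset lists of equal length")
    diffs = [r - h for h, r in zip(human_onsets, robot_onsets)]
    return mean(diffs), pstdev(diffs)


if __name__ == "__main__":
    human = [0.00, 0.52, 0.98, 1.51]  # expressive human timing (illustrative values)
    robot = [0.00, 0.50, 1.00, 1.50]  # strictly metronomic robot at 120 BPM
    m, s = asynchrony_stats(human, robot)
    print(f"mean {m * 1000:.1f} ms, spread {s * 1000:.1f} ms")
```

A mean near zero with a small spread indicates tight alignment. A robot that keeps a rigid tempo against an expressive human will typically show a larger spread, even if its own timing is perfectly precise.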

Challenges and Approaches

Temporal Precision and Real-Time Adaptation

A central challenge is aligning musical events with the micro-timing fluctuations characteristic of human performances. Real-time tempo tracking enables robots to continuously estimate and adjust to the ensemble's evolving speed.
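The sketch below illustrates real-time tempo tracking. It updates a tempo estimate each time a new beat onset arrives and smooths the inter-onset intervals with an exponential moving average. This is a deliberately simple stand-in, not the tracking method developed in this thesis. The class name and the smoothing factor `alpha` are assumptions.

```python
from typing import Optional


class TempoTracker:
    """Online tempo estimate from beat onset timestamps (seconds).

    Inter-onset intervals (IOIs) are smoothed with an exponential moving average,
    a deliberately simple stand-in for more robust beat-tracking methods.
    """

    def __init__(self, alpha: float = 0.3) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.last_onset: Optional[float] = None
        self.period: Optional[float] = None  # smoothed beat period in seconds

    def bpm(self) -> Optional[float]:
        return None if self.period is None else 60.0 / self.period

    def update(self, onset: float) -> Optional[float]:
        """Feed one onset time; return the current tempo in BPM (None until two onsets)."""
        if self.last_onset is not None:
            ioi = onset - self.last_onset
            if ioi <= 0:
                return self.bpm()  # ignore duplicate or out-of-order onsets
            if self.period is None:
                self.period = ioi
            else:
                self.period = self.alpha * ioi + (1.0 - self.alpha) * self.period
        self.last_onset = onset
        return self.bpm()


if __name__ == "__main__":
    tracker = TempoTracker(alpha=0.3)
    for t in [0.0, 0.5, 1.0, 1.5, 2.1, 2.7]:  # steady 120 BPM, then slowing
        print(t, tracker.update(t))
```

A larger `alpha` follows tempo changes such as rubato more quickly, but it also passes more timing noise through to the estimate.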

Dynamic Interaction and Predictive Modelling

Synchronization is inherently interactive. Robotic performers must anticipate fluctuations using predictive algorithms trained on historical performance data.
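Prediction can be illustrated without a trained model by extrapolating from recent beats. The sketch fits a straight line to the last few onset times, using ordinary least squares over the beat index, and extends it one beat ahead. It is a swapped-in linear baseline, not the learned predictor the text describes. The parameter `window` is hypothetical.

```python
def predict_next_onset(onsets: list[float], window: int = 4) -> float:
    """Predict the next beat time by fitting t_k = t_0 + k * period to recent onsets.

    Ordinary least squares over beat index k within the last `window` onsets,
    then extrapolation one beat past the most recent one.
    """
    if window < 2:
        raise ValueError("window must be at least 2")
    recent = onsets[-window:]
    n = len(recent)
    if n < 2:
        raise ValueError("need at least two onsets")
    k_mean = (n - 1) / 2
    t_mean = sum(recent) / n
    cov = sum((k - k_mean) * (t - t_mean) for k, t in enumerate(recent))
    var = sum((k - k_mean) ** 2 for k in range(n))
    period = cov / var              # fitted seconds per beat
    t0 = t_mean - period * k_mean   # fitted time of beat index 0 in the window
    return t0 + period * n          # index n is one beat after the last onset


if __name__ == "__main__":
    beats = [0.00, 0.50, 1.00, 1.52, 2.05]  # a performer gradually slowing down
    print(predict_next_onset(beats))
```

A longer window gives steadier predictions but responds more slowly to accelerando or ritardando. This trade-off is one motivation for learned models.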

Minimizing Latency

Latency, the delay between sensing musical input and executing a response, must be minimized for robots to match human response times. Any noticeable delay makes the robot lag behind the ensemble and can disrupt its cohesion.
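Prediction and latency interact. If the robot needs a known amount of time to turn a command into sound, it must issue the command that much before the target onset. The minimal sketch below assumes two things: the actuation latency has been measured beforehand, and a predicted onset time is available from some tempo model. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StrikeCommand:
    send_at: float  # time to issue the command to the actuator (s)
    late_by: float  # how much later than ideal the command goes out (0.0 if on time)


def schedule_strike(predicted_onset: float, now: float, actuation_latency: float) -> StrikeCommand:
    """Schedule a command so that the sound lands on the predicted onset.

    actuation_latency: measured delay from issuing a command to audible sound (s).
    If the ideal send time has already passed, send immediately and report the lateness.
    """
    ideal = predicted_onset - actuation_latency
    if ideal >= now:
        return StrikeCommand(send_at=ideal, late_by=0.0)
    return StrikeCommand(send_at=now, late_by=now - ideal)


if __name__ == "__main__":
    print(schedule_strike(predicted_onset=2.0, now=1.85, actuation_latency=0.08))  # on time
    print(schedule_strike(predicted_onset=2.0, now=1.95, actuation_latency=0.08))  # late
```

Whenever `late_by` is non-zero, the robot will sound behind the beat by that amount. Any reduction in latency shrinks the window in which this can happen.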

Single-Mode vs. Multimodal Synchronization

Multimodal Sensor Fusion

Sensor fusion integrates data streams from microphones, cameras, and motion sensors, enabling robots to track tempo, conductor gestures, and performers' movements simultaneously. However, this poses challenges:

- Data Rate Mismatch: Audio signals have higher sampling frequencies than visual feeds

- Latency and Noise: Each sensor introduces its own delay and noise profile

- Dynamic Weighting: Adaptive algorithms must assign each data stream a weight based on context
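The latency and weighting points can be combined in a small sketch. Each sensor pipeline reports three things: when it detected a beat, its known processing delay, and an estimate of its noise variance. Subtracting each delay places all estimates on a common timeline. Inverse-variance weighting then gives noisier modalities less influence, assuming independent, unbiased errors. This is a standard fusion rule used here for illustration, not the framework's actual fusion method. The data structure, field names, and sample values are assumptions.

```python
from dataclasses import dataclass


@dataclass
class BeatEstimate:
    sensor: str
    detected_at: float  # time the pipeline reported the beat (s)
    latency: float      # known processing delay of that pipeline (s)
    variance: float     # estimated noise variance of the beat time (s^2)


def fuse_beat_times(estimates: list[BeatEstimate]) -> tuple[float, float]:
    """Latency-correct each estimate, then combine with inverse-variance weights.

    Returns (fused beat time, variance of the fused estimate).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(e.variance <= 0 for e in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    corrected = [e.detected_at - e.latency for e in estimates]  # common timeline
    fused = sum(w * t for w, t in zip(weights, corrected)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    audio = BeatEstimate("microphone", detected_at=1.010, latency=0.010, variance=0.010 ** 2)
    video = BeatEstimate("camera", detected_at=1.050, latency=0.040, variance=0.030 ** 2)
    t, var = fuse_beat_times([audio, video])
    print(f"fused beat at {t:.4f} s, std {var ** 0.5 * 1000:.1f} ms")
```

Under this scheme, dynamic weighting amounts to updating each sensor's variance as the context changes. For example, the camera's variance could be raised when the conductor is occluded.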

Thesis Goals

This thesis develops an integrated framework to synchronize human musicians and robots, enabling dynamic, expressive, and adaptive musical interactions.

Key Objectives

🔄 Multimodal Framework

Develop a system that integrates audio, visual, and gestural inputs for musical synchronization using machine learning techniques.

🔮 Predictive Modeling

Create and refine deep learning models to predict musical parameters such as tempo, dynamics, and expressive timing.

📚 Continuous Learning

Incorporate continuous learning mechanisms using real-time feedback from human musicians.

🎭 Scalability Testing

Evaluate the system across diverse musical contexts: different ensemble sizes, genres, and acoustic environments.

Thesis Structure Overview

| Chapter | Focus | Key Contributions |
|---|---|---|
| 1 | Introduction and Overview | Context for research gaps and objectives |
| 2 | Literature Review | Identifies gaps in multimodal integration |
| 3 | Cyborg Philharmonic Framework | Novel multimodal synchronization framework |
| 4 | LeaderSTeM | LSTM-based leader identification |
| 5 | Visual Cues | Real-time expressive synchronization |
| 6 | Multimodal Synchronization | Experimental evaluation across contexts |
| 7 | Implementation | Continuous learning with user feedback |
| 8 | Conclusion | Future research directions |